Promptmetheus

What Is Promptmetheus?

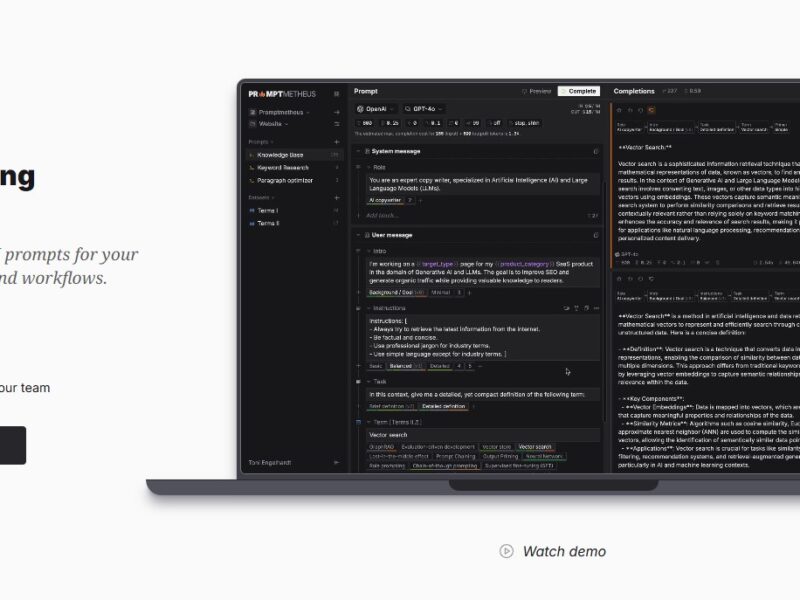

Promptmetheus is an advanced Prompt Engineering IDE (Integrated Development Environment) designed to help developers, AI teams, and prompt engineers build, test, optimize, and deploy prompts for large language models (LLMs).

In essence, it’s a tool that goes beyond a basic “enter your prompt into a box” workflow. With Promptmetheus, you can compose prompts in modular blocks, test across multiple models/APIs, track performance, collaborate with teams, and export or deploy your prompt workflows in production.

Features

Here are the core features that make Promptmetheus stand out:

- Modular Prompt Composition: Prompts are broken into blocks such as Context → Task → Instructions → Samples (shots) → Primer, enabling reuse, variation testing, and structured editing.
- Multi‑Model & Multi‑API Support: According to the website, it supports 15 providers and 150+ models, so you can test your prompts across LLMs from providers such as OpenAI, Anthropic, and Google (Gemini).
- Prompt Testing & Evaluation Tools: Includes datasets for rapid iteration, visual statistics, completion ratings, and performance analytics, so you can evaluate how well each prompt variant works.
- Traceability & Version History: You can track the full design history of your prompt variants, view version histories, and compare performance over time.
- Cost / Token / Model Usage Insights: Monitor inference costs, token usage, and model latency, and optimize prompts for cost and performance, not just output quality.
- Team Collaboration & Shared Workspaces: For teams, it provides shared workspaces, prompt libraries, and real‑time collaboration tools, making it easier for multiple engineers to develop prompts together.
- Export/Deployment Options: Prompts and completions can be exported (JSON, CSV, XLSX) and used in production workflows; some plans also support deploying prompts as endpoints (AIPI).
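To make the block idea concrete, here is a minimal Python sketch of composing a prompt from ordered blocks and deriving a variant by swapping a single block. The `PromptBlocks` class, its field names, and the way blocks are joined are illustrative assumptions. They are not Promptmetheus's internal format or API; the IDE does this interactively.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class PromptBlocks:
    """Hypothetical model of a block-structured prompt, in the order
    Context -> Task -> Instructions -> Samples (shots) -> Primer.
    Not Promptmetheus's actual data format."""
    context: str
    task: str
    instructions: tuple[str, ...] = ()
    samples: tuple[tuple[str, str], ...] = ()  # (message, expected category) shots
    primer: str = ""

    def compose(self) -> str:
        """Join the non-empty blocks, in order, into a single prompt string."""
        parts = [self.context, self.task]
        if self.instructions:
            parts.append("\n".join(f"- {rule}" for rule in self.instructions))
        for shot_input, shot_output in self.samples:
            parts.append(f"Message: {shot_input}\nCategory: {shot_output}")
        parts.append(self.primer)
        # Empty blocks (e.g. no primer) are skipped entirely.
        return "\n\n".join(part for part in parts if part)


base = PromptBlocks(
    context="You are a support assistant for an online bookstore.",
    task='Classify this customer message as refund, shipping, or other: "I was charged twice for my order."',
    instructions=("Answer with one word.", "Use lowercase."),
    samples=(("Where is my parcel?", "shipping"),),
    primer="Category:",
)

# A variant that swaps only the Task block; all other blocks are reused as-is.
variant = replace(
    base,
    task='Label the topic of this customer message (refund, shipping, or other): "I was charged twice for my order."',
)

print(base.compose())
print("---")
print(variant.compose())
```

Keeping the blocks immutable and deriving variants with `dataclasses.replace` means that each variant differs from its parent in exactly one place. This is what makes side-by-side comparisons of variants meaningful.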

Pros & Cons

Pros:

- Highly structured prompt engineering: The modular “block” system lets you iterate on and refine prompts in a systematic, repeatable way, which is a big win for teams working with LLMs.
- Great model‑agnostic flexibility: Because it supports many models and providers, you aren’t locked into one vendor; you can compare providers and pick the best one for your use case.
- Strong collaboration / team support: For organizations, prompt libraries, shared workspaces, and versioning increase productivity and reduce duplicated effort.
- Performance / cost visibility: Tracking token usage, cost, and model performance brings more discipline to prompt engineering, treating it as a real production activity rather than a series of one‑off experiments.
- Export and deployment friendly: Exporting prompt material and deploying endpoints let you integrate the results into real apps and workflows beyond a “playground.”

Cons:

- Learning curve: Because it’s feature‑rich and structured, new users may find the interface and concepts (blocks, variants, datasets, metrics) more complex than those of simpler prompt tools.
- Cost & savings trade‑off: Although the IDE provides cost insights, you still pay for and manage the underlying model inference yourself (API keys, tokens, etc.); testing across many large models can still become expensive.
- Over‑engineering risk: For simple one‑off prompts, the full feature set may feel like overkill; if you just need “enter prompt, get answer”, something simpler may suffice.
- Dependency on good data/variants: The system is only as good as its input; if you don’t supply good variants or test datasets, you may still end up with mediocre outputs.
- Production readiness differs by plan: Some advanced features (AIPI endpoints, enterprise deployment) may require higher‑tier plans or extra configuration.
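Inference is billed by the model providers rather than by the IDE, so it helps to estimate what a multi-model test run will cost before launching it. The sketch below makes two assumptions: per-million-token pricing (a common but not universal billing scheme), and a fixed average token count per call. The model names and prices are placeholders, not real provider rates.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelPricing:
    """USD price per one million tokens. Values used below are placeholders;
    check each provider's current price list before relying on a figure."""
    name: str
    input_per_mtok: float
    output_per_mtok: float


def call_cost(pricing: ModelPricing, input_tokens: int, output_tokens: int) -> float:
    """Inference cost of a single completion, in USD."""
    return (input_tokens * pricing.input_per_mtok
            + output_tokens * pricing.output_per_mtok) / 1_000_000


def estimate_run_cost(models: list[ModelPricing], dataset_rows: int,
                      input_tokens: int, output_tokens: int,
                      runs_per_row: int = 1) -> float:
    """Cost of running one prompt variant over every dataset row on every model.

    Simplification: every call is assumed to use the same average number of
    input and output tokens.
    """
    per_row = sum(call_cost(m, input_tokens, output_tokens) for m in models)
    return per_row * dataset_rows * runs_per_row


# Placeholder models and prices, not real provider rates.
models = [ModelPricing("model-a", 3.0, 15.0), ModelPricing("model-b", 0.5, 1.5)]
total = estimate_run_cost(models, dataset_rows=200, input_tokens=1_000, output_tokens=500)
print(f"Estimated cost: ${total:.2f}")  # -> Estimated cost: $2.35
```

The total is a product of models × rows × runs, so doubling any one of those factors doubles the bill. This is why broad multi-model sweeps are worth estimating up front.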

Use Cases

Promptmetheus is versatile and fits many scenarios, including but not limited to:

- Prompt Engineering for AI Apps: If you’re building an AI‑powered app (chatbot, assistant, writing tool), you can design the prompts, test them across models, refine them, and then deploy them.
- LLM Evaluation & Benchmarking: Compare the performance of the same prompt across GPT‑4, Claude, Gemini, etc., and select the best model and prompt variant.
- Team Collaboration on Prompt Libraries: Agencies or teams building LLM‑powered services can maintain a shared library of “best prompts”, update and version them, and onboard new team members easily.
- Cost / Token Optimization: For companies that use LLMs heavily, monitoring cost per prompt and token usage, and refining prompts to use fewer tokens while preserving output quality, can matter a great deal; Promptmetheus supports this.
- Deploying Prompt Endpoints: Once a prompt is refined and stable, you can wrap it as an endpoint (AIPI) and call it from your product via API rather than copying prompt text by hand.
- Research / Teaching Prompt Engineering: In educational or R&D settings, the system is useful for showing how prompts can be broken down, varied, and improved systematically.
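For the benchmarking use case, the final decision usually comes down to aggregating rated completions. Below is a minimal sketch. It assumes that each completion has been rated on a numeric scale and tagged with the model and prompt variant that produced it. The tuple layout and the `best_combination` helper are hypothetical; they are not an export format that Promptmetheus is known to use.

```python
from collections import defaultdict
from statistics import mean


def best_combination(ratings: list[tuple[str, str, int]]) -> tuple[str, str, float]:
    """Return (model, prompt_variant, mean_score) for the best-rated pair.

    `ratings` holds one (model, prompt_variant, score) tuple per rated
    completion (hypothetical layout). Ties go to the pair seen first.
    """
    scores_by_pair: dict[tuple[str, str], list[int]] = defaultdict(list)
    for model, variant, score in ratings:
        scores_by_pair[(model, variant)].append(score)
    if not scores_by_pair:
        raise ValueError("no ratings supplied")
    # max() keeps the first maximal item, and dicts preserve insertion order.
    (model, variant), scores = max(scores_by_pair.items(), key=lambda item: mean(item[1]))
    return model, variant, float(mean(scores))


# Placeholder ratings for illustration only (1-5 scale).
ratings = [
    ("model-a", "v1", 4), ("model-a", "v1", 5),
    ("model-a", "v2", 3),
    ("model-b", "v1", 4),
    ("model-b", "v2", 5), ("model-b", "v2", 5),
]
print(best_combination(ratings))  # -> ('model-b', 'v2', 5.0)
```

A mean over only a handful of ratings is noisy. In practice, you would collect enough rated rows per model/variant pair before trusting the winner.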

Compared to Other Tools

When assessing Promptmetheus relative to other prompt‑engineering or AI workflow platforms:

- Versus basic prompt editors/playgrounds: Many tools let you experiment with one model and one prompt at a time, with minimal metrics. Promptmetheus provides significantly more infrastructure: modular composition, multi‑model testing, team features, and analytics.
- Versus purpose‑built model‑wrapper platforms: Some platforms focus on deploying a single model or chatbot quickly with minimal prompt control; Promptmetheus focuses more on the engineering side of prompts: refining, versioning, and optimizing them.
- Versus team/enterprise prompt management platforms (e.g., PromptLayer, LangSmith): Some competitors emphasize logging, versioning, and A/B testing of prompts in production. Promptmetheus appears to combine engineering (the IDE), versioning, and deployment; its advantages are modular prompt design and multi‑model testing. On the flip side, competitors may offer deeper integrations (e.g., full chatbot flows, embeddings, vector stores), whereas Promptmetheus may need to be paired with additional tools to cover the full stack.
- Versus low‑code/no‑code prompt tools: If you’re not a prompt engineer and just want “enter text, get answer”, simpler tools may suffice and may be cheaper. Promptmetheus shines when you engineer prompts, iterate, test variants, and need reliability.
- Cost/complexity trade‑off: Many simpler platforms are cheaper and quicker to adopt. Promptmetheus gives more power, flexibility, and control, but that comes with more complexity and the need to invest in prompt design and dataset preparation.

Promptmetheus is a powerful, professional‑grade platform for prompt engineers, AI teams and developers working seriously with LLMs. If you’re building AI‑powered systems, want to version and optimize prompts, test across multiple models, collaborate in a team and deploy stable endpoints, it offers a compelling environment.

That said, it’s not a plug‑and‑play “simple single prompt” tool for casual users. If your needs are modest (e.g., you “just need one prompt for ChatGPT”), you might find it overkill. But for production use, prompt engineering workflows, cost and token tracking, and deployment to real applications, it holds up well.

Recommendation: If you’re on the AI‑engineering side of things, give Promptmetheus a trial (there is a free Playground plan). Test your prompt workflows, compare models, and see how much value you get from the optimization features. If you’re just starting out or have minimal prompt needs, consider whether a simpler tool suffices to begin with, and upgrade if you start needing more control.

FAQs

1. Is there a free plan for Promptmetheus?

Yes — there is a “Playground” / free plan which allows you to try the basic Prompt IDE with local data storage and OpenAI models.

2. How many models/providers does it support?

According to the website, Promptmetheus supports 15 providers and more than 150 models.

3. Can teams collaborate using Promptmetheus?

Yes — it provides team/shared workspaces, “shared prompt libraries”, real‑time collaboration and user/role management in higher plans.

4. Are inference/LLM costs included in the subscription?

No. The subscription covers the IDE/tooling; you will still need your own API keys and will incur the inference costs of the models you use.

5. Can I export prompts/results or deploy as an endpoint?

Yes — Promptmetheus allows exporting data (JSON, CSV, XLSX) and supports deploying prompts as endpoints (AIPI) in higher plans.